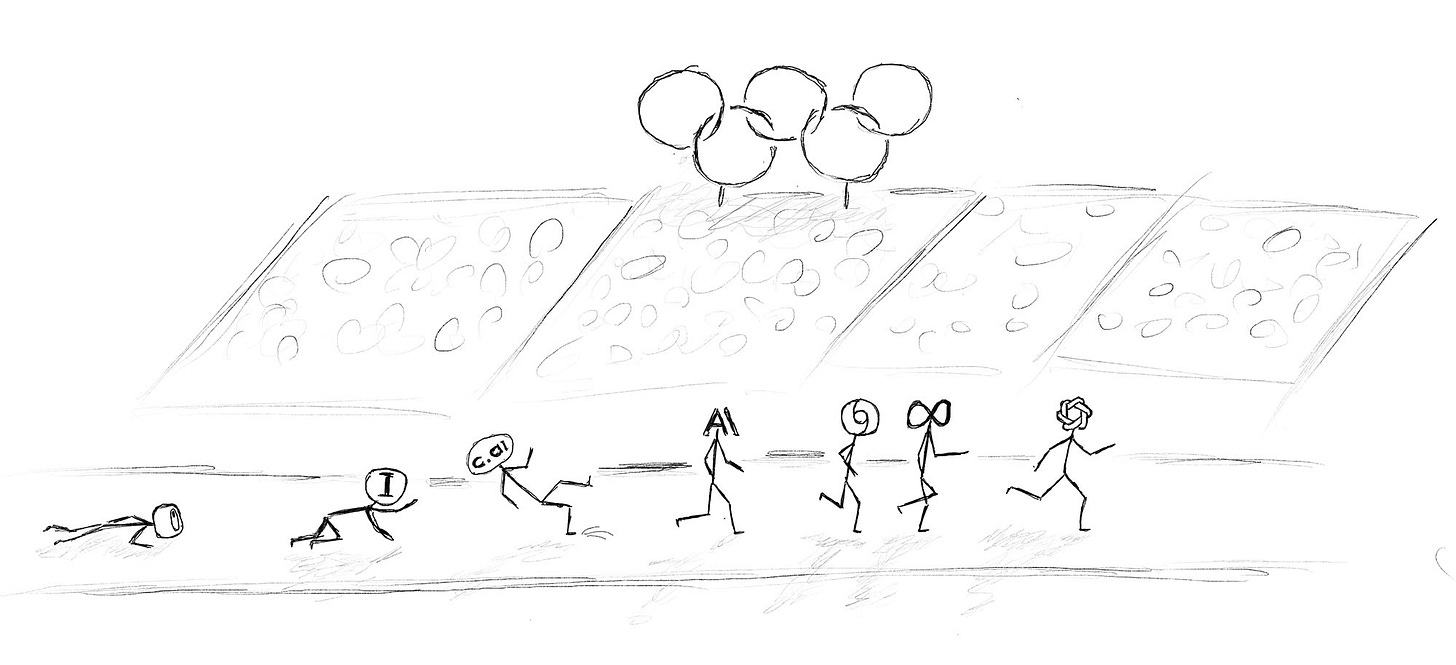

AI at the intersection?

Mid-year look at AI

Earlier this year, I wrote my predictions for AI in 2024, including the following: "What is here today and working is to stay, and there is not much new coming beyond that very soon." Many things have happened since then. Let's see where things stand in the middle of the year, what might come next, and what is limiting the progress of current technology.

Flattening in progress

In January, I predicted that we have mostly flattened out. While incremental improvements to the speed, accuracy, and cost of existing models will be made, this is unlikely to unlock fundamentally new applications. The rest of the field will also catch up with OpenAI.

It seems to me that this prediction is mostly true today. Let's look at a few stats:

Google Gemini 1.5 Pro, Meta Llama 3.1, and Anthropic Claude 3.5 all now perform similarly to GPT-4. xAI Grok is also quickly catching up.

Meanwhile, the raw performance of GPT-4 and GPT-4o has remained roughly the same over the last six months.

Cost per token has fallen, though, and generation speed has increased.

Much of this is now open-source, thanks to Meta's efforts, and you can run it on-premises.

All this is great for developers. If there was something you could build 6 months ago, now you can make it better and cheaper than ever. Indeed, many people did exactly that. Major productivity apps, such as Gmail, Slack, and MS Office, now have small 2023-like AI features sprinkled throughout. They help you find things, summarise content, or provide suggestions.

Despite this, more is needed to unlock fundamental AI applications and drive enough revenue. Over the last few weeks, the trend has been to scrutinise how much the major providers are spending on AI while questioning its impact on revenue. An excellent article, "AI’s $600B question," from Sequoia, nicely presents this point.

New physical interfaces didn't fare much better. The flop of startups like Humane or Rabbit shows the usability limits of hardware products built on existing technology. While this could have been predicted, I find their cases strangely similar to that of General Magic many years ago and Magic Leap more recently. In all of these cases, I think the issue is generally about timing, technology readiness, and commercialisation strategy rather than the idea itself. The iPhone eventually happened. Apple and Meta aim to offer a more viable route to wearable AI assistants and AR glasses. Let's see whether they get it right.

Part of commoditisation is the consolidation of independent AI labs. It is happening in an entirely unexpected way. It used to be the case that if you could not stay competitive as a startup, you went bankrupt or got acquired. Now, the default exit for AI startups like Inflection.AI, Adept AI and Character AI seems to be getting "hollowed out": leadership and the team join a big company while the shell of the original company is left to continue. It's an innovation, but one meant to avoid regulation.

Surprises

The above could suggest that we have indeed flattened out. However, that's not the complete story, and there are reasons to stay optimistic, too.

Sora surprised me. I thought the capability of generating high-fidelity videos was two years out. It is happening already today. I have yet to use it, but some examples are truly impressive.

Another hope on the horizon is new datasets. In my January predictions, I mentioned that new datasets might arise capturing humans' daily use of computers. They would unlock the ability to train LLM agents to perform these tasks on their own. I didn't know how such datasets could be collected, and I flagged the likely privacy issues.

Microsoft, with its Recall, seems to be aiming for precisely this. It implicitly collects this dataset in the background to offer a search over your activity. The same dataset can be used to train and automate the same tasks in the future. Unsurprisingly, it immediately raised the abovementioned privacy concerns. Now, Apple, with its integrated Apple Intelligence, is aiming for the same goal but from a slightly different angle. In both cases, you need control at the OS level. These are the only two companies that can pull this off. The exception might be Google, which could embed similar functionality directly into Google Chrome.

The bull and bear case for AI

AI will eventually be more significant than anything else. The question is how long it will take. Will it take 1, 5, 10 or 100 years? What determines how quickly it will happen?

I continue to believe the key reason more progress was not made this year is the lack of further fundamental scientific improvements in the underlying technology. You just can't build many of the AI applications people are excited about with today's technology. You can’t build agents that work very well or robots that can accomplish medium-difficulty tasks. Current technology is also no secret anymore. All leading LLMs across big tech are built more or less the same way.

Given this, I think there are two possible ways for the future to unfold. It depends on whether further technological breakthroughs happen very soon or not.

The bear case

Due to the lack of fundamental technology breakthroughs in the coming years, LLMs will keep improving incrementally by 20% YoY (but not 10x YoY), and the field will further commoditise. Their applications will slowly increase across existing products through incremental features.

New AI-first products from 2024 onwards will be comparatively rare and will require a unique product experience that efficiently leverages existing technology instead of creating new technology.

Public company stocks will normalise to reflect this outlook. Many startups built on the premise of radically better AI becoming available very soon will go bankrupt or need to find a different path forward. By very soon, I mean 1-3 years, which is the average runway of a venture-backed startup. On the other hand, applications that have solid user traction and leverage existing AI will only work better.

The bull case

New AI technological breakthroughs will come in the next 1-3 years. They will increase the applicability of the technology 10x and unlock more value creation, propelling the industry's growth. The number of people working on AI is now higher than ever, and the rewards are greater than ever. The breakthroughs might come from algorithmic improvements, increases in scale, new kinds of datasets, integrations, or new HW platforms (AR glasses, robots).

In recent years, much of the investment has focused on preparing for the bull case. You just can't miss it if you are an investor or a big company. Nobody wants to be a Nokia when the iPhone arrives. It's entirely possible, though, that the reality will resemble the bear case. I think it’s better to be prepared for both.

All I’ve been reading these days is the godfather of AI, Geoffrey Hinton, saying AI will take over the world and we’re in big trouble. On the flip side, Sam Altman says that with natural language you can now build any app you want without doing anything, which would be cool since I have a few ideas.